2 June 2020

Yulai Xie, Ph.D.

Hitachi (China) Research & Development Corporation

Public transportation vehicles such as buses are now equipped with many on-board cameras, mainly for security purposes. If these existing cameras could also estimate passenger flow automatically, operators could optimize route plans and timetables, improving the efficiency of public transportation services and benefitting the operation of the whole system. To this end, we designed a passenger counting system that combines spatial-temporal context (STC) tracking, which follows each passenger across space and time, with convolutional neural network (CNN) detection, which locates passengers in each image. In addition, inspired by the way ants move in nature, we used passengers' movement information to build a biologically inspired pheromone map, modelled on the chemical trails that insects secrete to mark locations, which makes counting more robust. Experiments on a real public bus dataset showed that this method outperforms several existing methods.
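As a rough illustration of the pheromone-map idea, the Python sketch below models it as a grid: each observed head position deposits pheromone on its cell, every cell evaporates by a fixed fraction each frame, and a drifted tracker estimate is snapped back to the strongest nearby cell. The class name `PheromoneMap`, the grid resolution, the deposit and evaporation values, the search radius and the snap-to-maximum rule are all assumptions made for this example; the paper's actual formulation may differ.

```python
class PheromoneMap:
    """Hypothetical grid-based pheromone map (illustrative sketch only)."""

    def __init__(self, width, height, evaporation=0.1, deposit=1.0):
        self.width = width
        self.height = height
        self.evaporation = evaporation  # fraction of pheromone lost per frame (assumed)
        self.deposit = deposit          # pheromone added per observed position (assumed)
        self.grid = [[0.0] * width for _ in range(height)]

    def step(self, positions):
        """Evaporate every cell, then deposit pheromone at each (x, y) cell."""
        keep = 1.0 - self.evaporation
        for row in self.grid:
            for x in range(self.width):
                row[x] *= keep
        for x, y in positions:
            if 0 <= x < self.width and 0 <= y < self.height:
                self.grid[y][x] += self.deposit

    def correct(self, x, y, radius=2):
        """Snap a possibly drifted estimate to the strongest cell within `radius`.

        Returns the original (x, y) if no nearby cell holds any pheromone.
        """
        best, best_val = (x, y), 0.0
        for yy in range(max(0, y - radius), min(self.height, y + radius + 1)):
            for xx in range(max(0, x - radius), min(self.width, x + radius + 1)):
                if self.grid[yy][xx] > best_val:
                    best, best_val = (xx, yy), self.grid[yy][xx]
        return best


# Toy usage: a passenger is repeatedly observed at cell (4, 4).
pmap = PheromoneMap(10, 10)
for _ in range(5):
    pmap.step([(4, 4)])
print(pmap.correct(5, 6))  # prints (4, 4): the drifted estimate is pulled back
```

Evaporation lets stale trails fade, so only recently and repeatedly visited cells keep enough pheromone to attract a drifting estimate.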

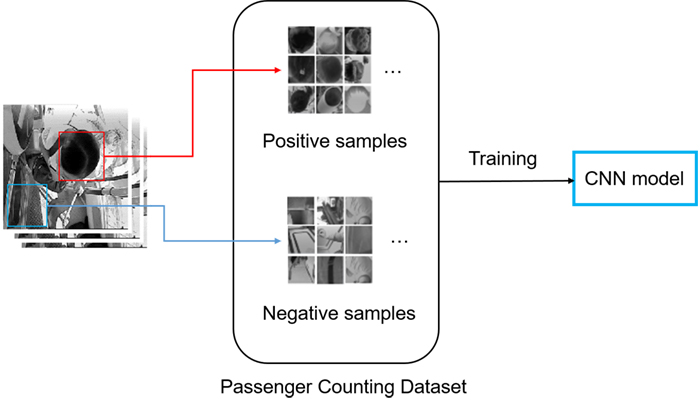

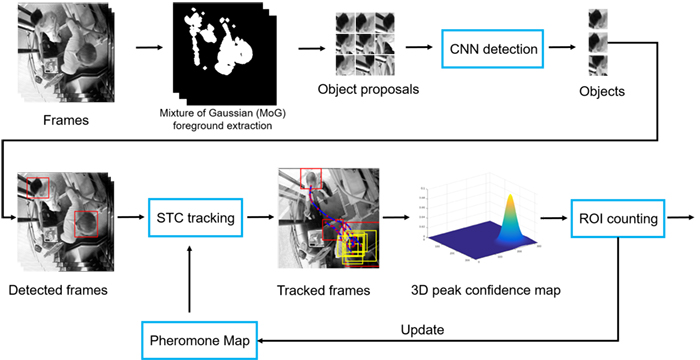

Figure 1 shows a new dataset collected from highly complex public transportation scenes. This dataset, which contains manually selected positive samples (passenger heads) and negative samples, is used to train the CNN model that detects passengers. Figure 2 shows the framework used to count passengers in the videos. It consists of three modules: passenger detection by the CNN model, STC tracking, and the biologically inspired pheromone map.

Figure 1: The passenger counting dataset

Figure 2: The passenger counting framework
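The per-frame interplay between detection and tracking in Figure 2 can be sketched as follows: detections close to an existing track update that track, and unmatched detections start new tracks. In this Python sketch, greedy nearest-centre matching with a distance gate stands in for STC tracking, and detections are plain head centres; the class name `NearestNeighbourTracker`, the 20-pixel gate and the rule of dropping unmatched tracks are hypothetical simplifications, not the method in the paper.

```python
import math


class NearestNeighbourTracker:
    """Hypothetical per-frame association of head detections with tracks.

    Greedy nearest-centre matching stands in for STC tracking here; it is a
    simplification for illustration, not the tracker used in the paper.
    """

    def __init__(self, gate=20.0):
        self.gate = gate   # max centre distance in pixels for a match (assumed)
        self.tracks = {}   # track_id -> (x, y) last known head centre
        self._next_id = 1

    def update(self, detections):
        """Update tracks with this frame's head centres [(x, y), ...]."""
        # Candidate (distance, track, detection) pairs inside the gate, closest first.
        pairs = sorted(
            (math.dist(pos, det), tid, j)
            for tid, pos in self.tracks.items()
            for j, det in enumerate(detections)
            if math.dist(pos, det) <= self.gate
        )
        updated, used_tracks, used_dets = {}, set(), set()
        for _, tid, j in pairs:
            if tid in used_tracks or j in used_dets:
                continue  # each track and each detection is matched at most once
            updated[tid] = detections[j]
            used_tracks.add(tid)
            used_dets.add(j)
        # Detections that matched no existing track start new tracks.
        for j, det in enumerate(detections):
            if j not in used_dets:
                updated[self._next_id] = det
                self._next_id += 1
        # Tracks with no matching detection are dropped (simplification).
        self.tracks = updated
        return self.tracks


tracker = NearestNeighbourTracker()
print(tracker.update([(10, 10), (100, 50)]))  # {1: (10, 10), 2: (100, 50)}
print(tracker.update([(104, 55), (14, 12)]))  # {1: (14, 12), 2: (104, 55)}
```

A real tracker would keep unmatched tracks alive for a few frames to survive missed detections; that bookkeeping is omitted here to keep the sketch short.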

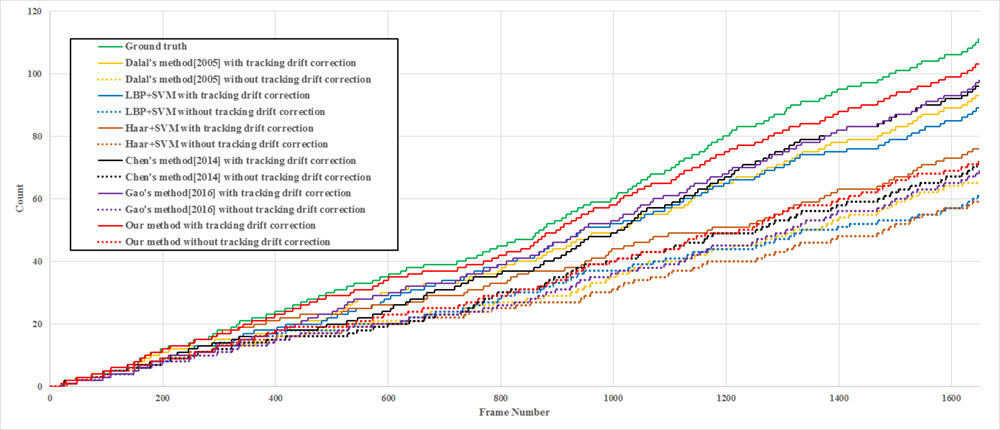

The dataset was collected from real surveillance video of public buses in China, and we applied our method to estimate passenger flow in these bus scenes. The ground truth was built by manually counting the passengers getting on and off the bus in the surveillance video. To quantitatively compare our method with others, we applied six algorithms to 12 video clips from real bus scenes, as shown in Figure 3. Figure 3 also compares the results with and without correcting tracking drift (the accumulation of tracking error over time) using the biologically inspired pheromone map module. Our method achieved a higher accuracy of 64.66% even without tracking drift correction, and applying the correction raised the accuracy further, to 93.10%.

Figure 3: Comparison of our passenger counting method with five other methods on the test set of 1,636 test images.
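How the accuracy figures are computed is not specified above. Purely as an illustration, the Python sketch below assumes one plausible definition for counting tasks: one minus the total absolute counting error divided by the total ground-truth count, aggregated over clips and floored at zero. The function name `counting_accuracy` and this definition are assumptions, and the toy counts are invented, not taken from our dataset.

```python
def counting_accuracy(estimated, ground_truth):
    """Hypothetical counting accuracy aggregated over several clips.

    estimated, ground_truth: per-clip passenger counts, same length.
    Accuracy = 1 - (total absolute count error / total true count),
    floored at 0. This definition is an assumption for illustration.
    """
    if len(estimated) != len(ground_truth):
        raise ValueError("need one estimate per ground-truth clip")
    total_true = sum(ground_truth)
    if total_true == 0:
        raise ValueError("ground truth contains no passengers")
    total_error = sum(abs(e - g) for e, g in zip(estimated, ground_truth))
    return max(0.0, 1.0 - total_error / total_true)


# Invented toy counts for three clips (not real results).
print(round(counting_accuracy([9, 12, 5], [10, 12, 6]), 4))  # prints 0.9286
```

Summing errors before dividing weights busy clips more heavily than averaging per-clip accuracies would; which choice is appropriate depends on how the evaluation is meant to be read.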

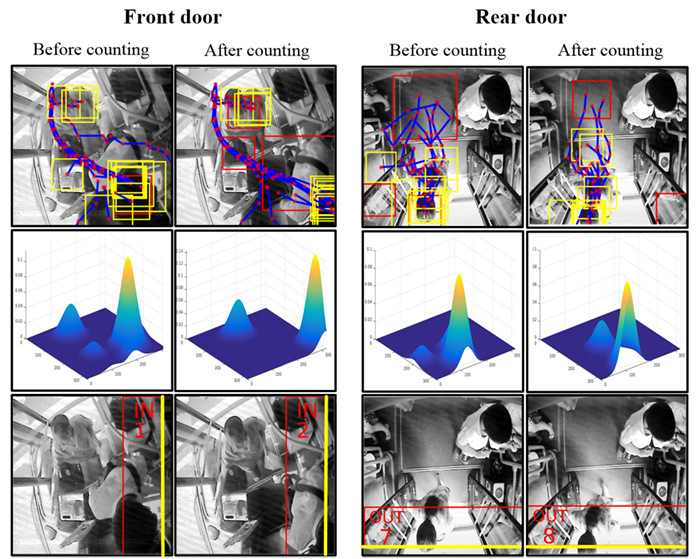

Figure 4 shows the passenger counting process at the front and rear doors, before and after counting.

Figure 4: Illustration of passenger counting in real scenes.
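Counting at a door can be reduced to checking whether a tracked head ends up on the other side of a virtual counting line. The Python sketch below assumes a horizontal line at a hypothetical image row `line_y`, treats a downward crossing (increasing y) as boarding and an upward crossing as alighting, and compares only the first and last positions of each track so that jitter around the line is not counted twice. The function name, the line position and the direction convention are assumptions that depend on camera placement, not details from the paper.

```python
def count_crossings(trajectories, line_y):
    """Count boarding/alighting events from tracked head trajectories.

    trajectories: dict track_id -> list of (x, y) head centres in frame order
    line_y:       image row of the virtual counting line (hypothetical)

    Returns (boarding, alighting). Each track is counted at most once, based
    on which side of the line it started and ended on.
    """
    boarding = alighting = 0
    for points in trajectories.values():
        if len(points) < 2:
            continue  # a single observation cannot cross the line
        start_y, end_y = points[0][1], points[-1][1]
        if start_y < line_y <= end_y:
            boarding += 1   # moved downward across the line (assumed "on")
        elif end_y < line_y <= start_y:
            alighting += 1  # moved upward across the line (assumed "off")
    return boarding, alighting


tracks = {
    1: [(50, 10), (52, 40), (55, 80)],     # crosses downward
    2: [(120, 90), (118, 60), (121, 20)],  # crosses upward
    3: [(200, 30), (201, 45), (199, 35)],  # hovers above the line
}
print(count_crossings(tracks, line_y=50))  # prints (1, 1)
```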

The system uses a single inexpensive overhead camera, which requires no calibration and keeps the system low-cost. By integrating STC tracking with CNN detection, it counts passengers both effectively and efficiently, and the biologically inspired pheromone map corrects tracking drift to make counting robust. Experimental results showed that this method performs better than many existing methods. The system can also be applied to other public spaces, such as subway stations and parks, to estimate passenger flow for public space management.

For more details, we encourage you to read our paper, “Passenger flow estimation based on convolutional neural network in public transportation system,” which is available at https://www.sciencedirect.com/science/article/abs/pii/S0950705117300849

Thanks to my co-authors Guojin Liu, Zhenzhi Yin and Yunjian Jia of Chongqing University, with whom this research was jointly conducted.

Dalal's method [2005]: Dalal, N., Triggs, B. Histograms of oriented gradients for human detection. IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR'05). IEEE, 2005, 1: 886-893.

Chen's method [2014]: Chen, L., Wu, H., Zhao, S. et al. Head-shoulder detection using joint HOG features for people counting and video surveillance in library. 2014 IEEE Workshop on Electronics, Computer and Applications. IEEE, 2014: 429-432.

Gao's method [2016]: Gao, C., Li, P., Zhang, Y. et al. People counting based on head detection combining Adaboost and CNN in crowded surveillance environment. Neurocomputing, 2016.